Amphitheater

February 21, 2025 (3 Esfand)

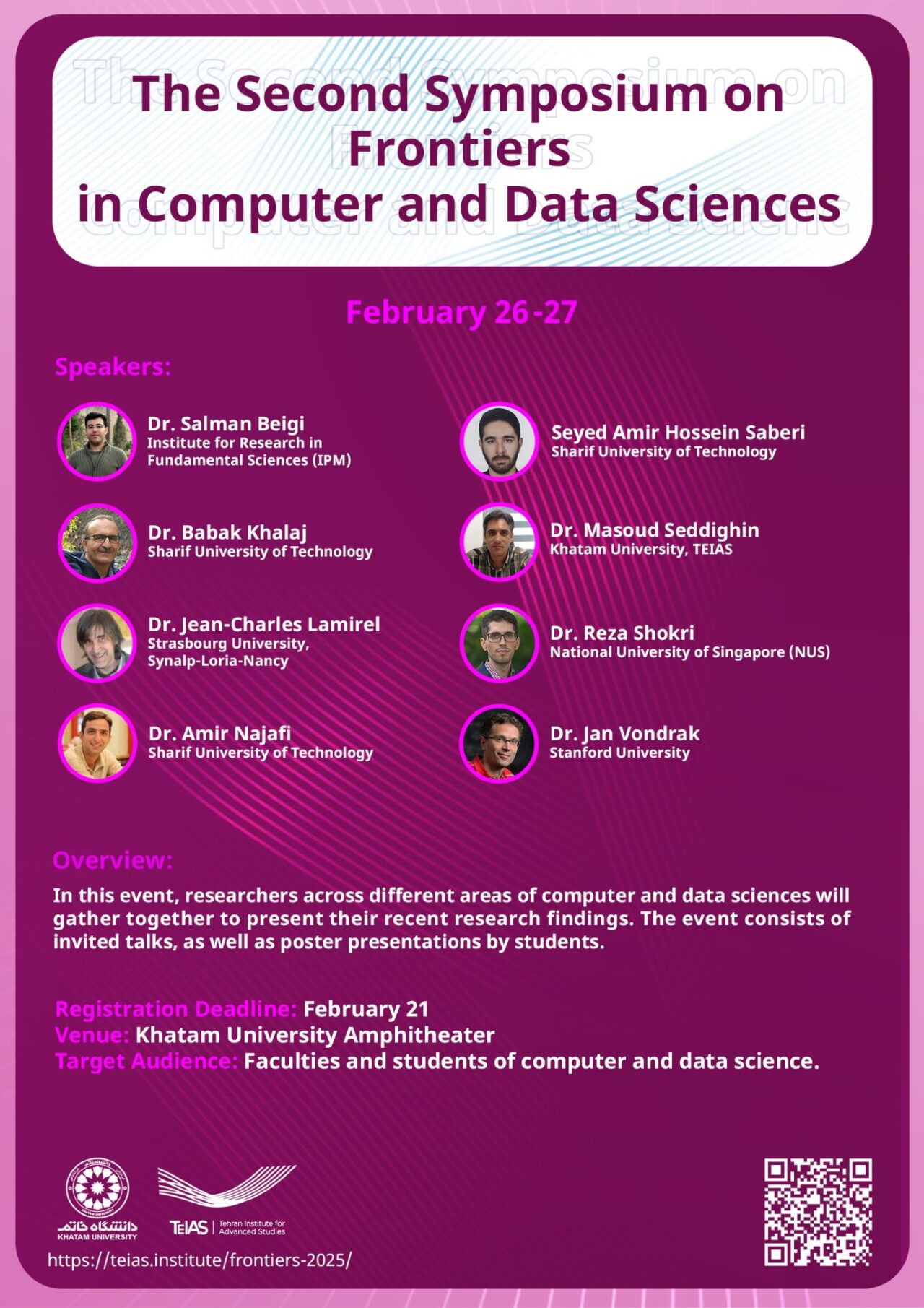

Overview

In this event, researchers from different areas of computer and data science will gather to present their recent research findings. The event consists of invited talks and poster presentations by students.

Target Audience

Faculty members and students in computer and data science.

Speakers

Title: Quantum computing: state of the art

Abstract: This talk is a quick review of the main ideas behind quantum computation. We discuss the challenges of building a quantum computer and review the state of the art. The talk is not technical and should be accessible to most audiences.

Title: Advanced methods for topic modeling: surprise is in the corner! Comparison of statistical approaches with LLM and embedding-based approaches.

Title: Robust Model Evaluation over Large-scale Federated Networks

Abstract: In this talk, I will briefly introduce the federated learning paradigm, a branch of distributed learning that preserves client privacy, along with beta (A/B) testing over large-scale networks. I will then delve into a specific problem in robust federated model evaluation: consider a network of clients, each with private, non-IID local datasets governed by an unknown meta-distribution. A central server aims to evaluate the average performance of a given ML model not only on this network (standard beta or A/B testing) but also on all possible unseen networks that are meta-distributionally similar, as measured by either $f$-divergence or Wasserstein distance. To address this, we introduce a novel robust optimization framework that can be implemented in a private and federated manner, with at most polynomial time and query complexity. Specifically, we propose a private server-side aggregation technique for local adversarial risks, which provably enables global robust model evaluation. We also establish asymptotically minimax-optimal bounds for the risk average and cumulative distribution function (CDF), with vanishing generalization gaps as the source network size $K$ grows and the minimum local dataset size exceeds $\mathcal{O}(\log K)$. Empirical results further validate the effectiveness of these bounds in real-world tasks. This talk is based on a recent joint work with Samin Mahdizadeh (UCLA) and Farzan Farnia (Chinese University of Hong Kong).

Title: Enhancing Machine Learning Generalization Through Distributional Robustness

Abstract: In modern machine learning, generalization beyond observed training data remains a fundamental challenge, particularly in the presence of distribution shifts. Distributionally Robust Learning (DRL) offers a principled approach to addressing this challenge by optimizing for worst-case scenarios within a specified uncertainty set. In this talk, I will present two of our recent works that leverage DRL to enhance adaptation and generalization. The first work, Gradual Domain Adaptation via Manifold-Constrained Distributionally Robust Optimization, introduces a novel DRL-based framework incorporating manifold constraints to facilitate smooth adaptation across a sequence of shifting domains. This approach ensures stability and improves model robustness in real-world scenarios where data distributions evolve gradually over time. The second work, Out-Of-Domain Unlabeled Data Improves Generalization, challenges the conventional reliance on in-domain data for model training. It shows that strategically incorporating out-of-domain, unlabeled data under a DRL framework can significantly enhance performance, even in previously unseen environments. By integrating robust optimization principles, these approaches provide new insights into improving machine learning models in dynamic and uncertain settings. Theoretical foundations, methodological innovations, and empirical results will be discussed to highlight the effectiveness of DRL in addressing real-world challenges.

Title: Recent Advancements in Fair Allocation of Indivisible Goods

Abstract: Fair allocation of indivisible goods is a fundamental problem in social choice and algorithmic game theory, with numerous real-world applications. In this talk, I will survey prominent fairness notions, key challenges, and recent advancements in fair allocation. My central focus will be on maximin share (MMS) fairness, one of the most well-studied criteria that ensures each agent receives a share at least as valuable as what they could guarantee in a symmetric fair division. I will highlight key results, including our recent work on approximating MMS for subadditive valuations.

Title: Adversarial Approaches to Data Tracing in Machine Learning

Abstract: In this talk, I explore tracing information through the stages of the ML pipeline, focusing on the critical requirements for building trustworthy ML systems. From an adversarial perspective, I show how we can systematically analyze model security and privacy using various attack strategies. I address two major challenges: tracing data to a model’s input, which exposes privacy risks with respect to the training data, and tracing data to its output, which demonstrates generated data ownership through watermarking. For the first challenge, I present robust membership inference attacks to quantify memorization and information leakage. For the second, I introduce smoothing attacks to evaluate the robustness of watermarking techniques in large language models. References: https://arxiv.org/abs/2312.03262, https://arxiv.org/abs/2407.14206

Title: Prophet inequality with recourse

Abstract: Prophet inequalities compare the expected outcome of a stopping rule to an optimal solution under complete knowledge of the future. The classical prophet inequality states that for a sequence of nonnegative random variables $X_1, \ldots, X_n$ with known distributions, there is a stopping rule which recovers at least $1/2$ of the expected maximum. We consider a setting introduced by Babaioff, Kleinberg and Hartline, where a previously selected random variable $X_i$ can be discarded at a cost of $\beta X_i$, for some parameter $\beta > 0$. We determine the optimal factor for $\beta > 1$ to be $(1+\beta)/(1+2\beta)$, via combinatorial optimization techniques involving flows and cuts. We also describe the optimal solution for $0 < \beta < 1$ implicitly via a differential equation. Joint work with Farbod Ekbatani, Rad Niazadeh, and Pranav Nuti.

Schedule

08:00 – 08:45 Light Refreshment + Registration

08:45 – 09:00 Opening

09:00 – 09:40 Talk: Dr. Reza Shokri

10:00 – 10:45 Talk: Dr. Masoud Seddighin

10:45 – 11:15 Coffee break

11:15 – 12:00 Talk: Dr. Amir Najafi

12:00 – 14:00 Lunch

14:00 – 17:00 Poster presentations and coffee break

08:00 – 09:00 Light Refreshment

09:00 – 09:45 Talk: Dr. Jan Vondrak

10:00 – 10:45 Talk: Dr. Salman Beigi

10:45 – 11:15 Coffee break

11:15 – 12:00 Talk: Dr. Jean-Charles Lamirel

12:00 – 14:00 Lunch

14:00 – 14:45 Talk: TBA

15:00 – 16:00 Talk: Dr. Babak Khalaj – Seyed Amirhossein Saberi

16:00 – 16:15 Coffee break

16:15 – 17:15 Final Ceremony (with poster awards)